Please check my Google Scholar profile for my publications.

Below is an incomplete and not necessarily up-to-date list of selected publications:

Watch: A Validation Framework and Language for Tool Qualification

Luiz Cordeiro¹, Christian Becker¹, Markus Pielmeier¹ & Julian Amann¹, In: Trapp, M., Schoitsch, E., Guiochet, J., Bitsch, F. (eds) Computer Safety, Reliability, and Security. SAFECOMP 2022 Workshops.

Abstract

ISO 26262:2018 is one of the most important standards for functional safety within the automotive domain. One crucial aspect of the standard is software tool qualification. The goal of software tool qualification is to ensure that none of the software tools used (compilers, code generators, etc.) introduces safety-related malfunctioning behavior. Therefore, a so-called tool confidence level (TCL) is determined for each tool, and based on this level, appropriate tool qualification methods are chosen, such as the validation of a software tool. As an input for the TCL determination, all relevant use cases of a tool must be considered.

In the context of the development of advanced driver-assistance systems (ADAS) such as automated driving, the TCL determination can already be a very complex task. Our internal ADAS software stack consists of more than 23 million source lines of code, with close to 2000 developers working on this stack in parallel, triggering more than 20000 CI (continuous integration) builds per day. Since we are following an agile development methodology, our toolchain can change every day.

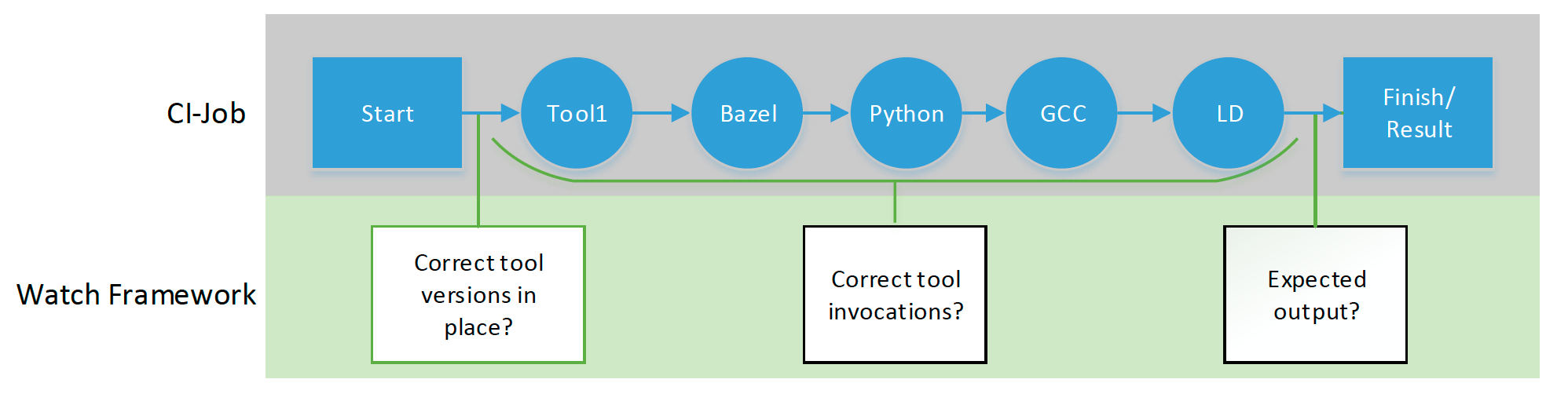

To be able to withstand these continuous changes we developed Watch. Watch is a software validation framework and domain-specific language to support tool qualification for automotive functional safety. Watch helps to identify all used tools within our toolchain, ensures that the correct version of a tool has been used, and allows us to define in a formal way how a tool can be used within our environment. Furthermore, it offers the possibility to formulate qualification toolkits.

An Approach to Describe Arbitrary Transition Curves in an IFC Based Alignment Product Data Model

Julian Amann¹, Matthias Flurl¹, Javier R. Jubierre¹, André Borrmann¹, In: Proc. of the 2014 International Conference on Computing in Civil and Building Engineering, ISBN 978-0-7844-1361-6, Orlando, USA, 2014

¹Technische Universität München

Abstract

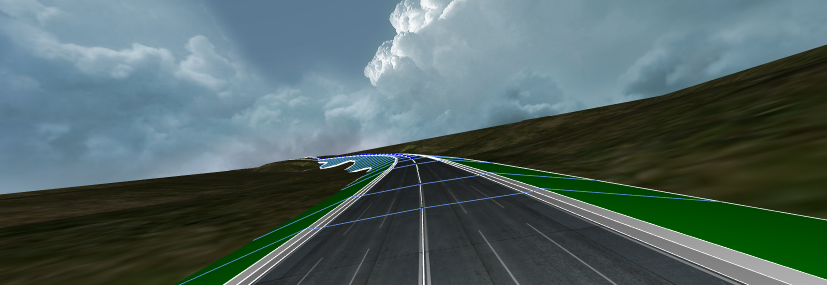

Open standards for infrastructure based on IFC (Industry Foundation Classes) are mainly developed by the openINFRA initiative of the buildingSMART organization. Recently, several proposals for alignment models emerged with the development of the upcoming IFC 5 standard that in particular targets infrastructure projects such as roads, bridges and tunnel buildings. A common drawback of all these proposals is their limited description of arbitrary transition curves. For instance, in all proposed alignment models there are some missing types of transition curves, or different parameters are suggested to describe a certain transition curve type. Designing a neutral data format that satisfies all stakeholders in an international context is therefore difficult. A novel approach to describe transition curves based on the so-called IFCPL (Industry Foundation Classes Programming Language) is described and its integration into an IFC based alignment model is shown to avoid these problems.

Using Image Quality Assessment to Test Rendering Algorithms

Julian Amann¹, Bastian Weber¹, Charles A. Wüthrich¹, WSCG Full papers proceedings, ISBN 978-80-86943-74-9, pp. 205-214, Union Agency, 2013

Abstract

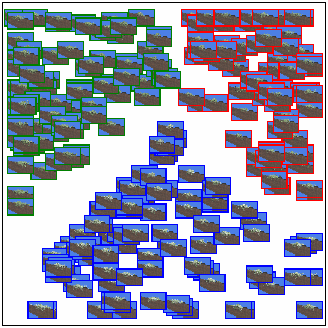

Testing rendering algorithms is time intensive. New renderings have to be compared to reference renderings whenever a change is introduced into the render system. To speed up this test process, unit testing can be applied. But only detecting differences at the pixel level is not enough. For instance, in the context of games or scientific visualization, we are often faced with random, procedurally generated geometry such as particle systems, waving water, plants or molecules. Therefore, a more sophisticated approach than a pixelwise comparison is needed. We propose a Smart Image Quality Assessment Algorithm (SIQA) based on a self-organizing map which can handle random scene elements. We compare our method with traditional image quality assessment methods like Mean Squared Error (MSE), Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Maps (SSIM). The proposed method helps to prevent images from being wrongly categorized as correct or as having errors, and ultimately helps to save time and increase productivity in the context of a test-driven development process for rendering algorithms.
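
To illustrate why a pixelwise comparison struggles with random scene elements, here is a minimal sketch of the two simplest baseline metrics named in the abstract, MSE and PSNR. It is not the SIQA method from the paper. The function names, the list-of-rows image representation and the 8-bit value range are assumptions made only for this example.

```python
import math


def mse(reference, candidate):
    """Mean squared error of two grayscale images given as lists of rows."""
    same_shape = len(reference) == len(candidate) and all(
        len(r) == len(c) for r, c in zip(reference, candidate)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")
    pixel_count = sum(len(row) for row in reference)
    if pixel_count == 0:
        raise ValueError("images must not be empty")
    squared_error = sum(
        (a - b) ** 2
        for ref_row, cand_row in zip(reference, candidate)
        for a, b in zip(ref_row, cand_row)
    )
    return squared_error / pixel_count


def psnr(reference, candidate, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(reference, candidate)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


# Hypothetical 2x2 "particle" pattern and the same pattern shifted by one pixel.
reference = [[0, 255], [255, 0]]
shifted = [[255, 0], [0, 255]]

print(mse(reference, reference), psnr(reference, reference))
print(mse(reference, shifted), psnr(reference, shifted))
```

In this toy case both images show the same kind of pattern, yet the shifted version receives the worst possible pixelwise score. A unit test built on such a metric alone would flag randomly placed geometry as an error.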

Error Metrics for Smart Image Refinement

Julian Amann¹, Matthäus G. Chajdas¹, Rüdiger Westermann¹, Journal of WSCG, Vol.20, No.1-3, pp. 127-135, ISSN 1213-6972, Union Agency, 2012

¹Technische Universität München

Abstract

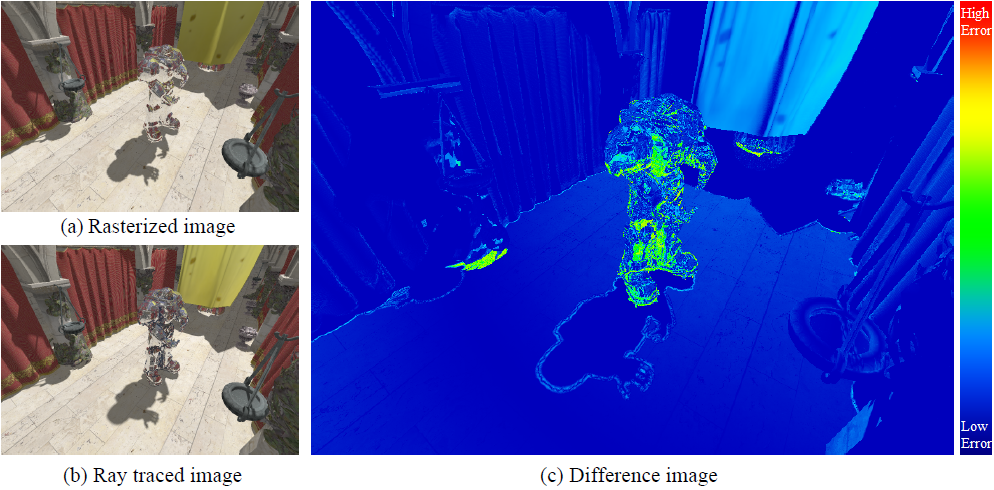

Scanline rasterization is still the dominating approach in real-time rendering. For performance reasons, real-time ray tracing is only used in special applications. However, ray tracing computes better shadows, reflections, refractions, depth-of-field and various other visual effects, which are hard to achieve with a scanline rasterizer. A hybrid rendering approach benefits from the high performance of a rasterizer and the quality of a ray tracer. In this work, a GPU-based hybrid rasterization and ray tracing system that supports reflections, depth-of-field and shadows is introduced. The system estimates the quality improvement that a ray tracer could achieve in comparison to a rasterization-based approach. Afterwards, regions of the rasterized image with a high estimated quality improvement index are refined by ray tracing.

Diploma Thesis: Echtzeit-Schattenwurf in RTT DeltaGen

Julian Amann¹, Diploma Thesis, 2009

¹University of Applied Sciences Landshut/Realtime Technology AG

Abstract

This diploma thesis deals with shadows in computer graphics, in particular with the shadow mapping method and its extensions. Shadows enable a viewer to better understand spatial relationships, increase realism and, last but not least, contribute to the atmospheric effect of a scene. The aim of this diploma thesis is to evaluate the visual quality of the online shadow algorithms of RTT DeltaGen (a high-end visualization software) without degrading the performance of the application. The online shadows are based on the shadow mapping algorithm. Disadvantages of this method include discontinuous shadow transitions and block artifacts. Numerous publications have presented possible improvements to the weak points of shadow mapping. In this thesis, the strengths of the different approaches, such as the reduction of aliasing effects or soft shadow gradients, as well as their weaknesses are analyzed, and the approaches are examined with regard to how well they can be combined. After an evaluation of the different methods regarding their suitability for RTT DeltaGen, an algorithm is selected for implementation. The necessary changes to the software architecture and problems with the integration of the selected algorithm, e.g. the extension of the underlying scene graph by a separate camera object, are described. Finally, the visual quality improvements of the shadows achieved by the chosen approach, such as steady shadow transitions or the avoidance of erroneous self-shadowing, are presented.